Working Notes: a commonplace notebook for recording & exploring ideas.


Maintaining a work log with records and experiences of applying AI agents, autocomplete and other mechanisms as I work through my day. Using this to think through how to leverage AI for building, the different patterns and ways I've applied AI or seen people apply it -- and thinking through both the tools and models I wish existed for this.

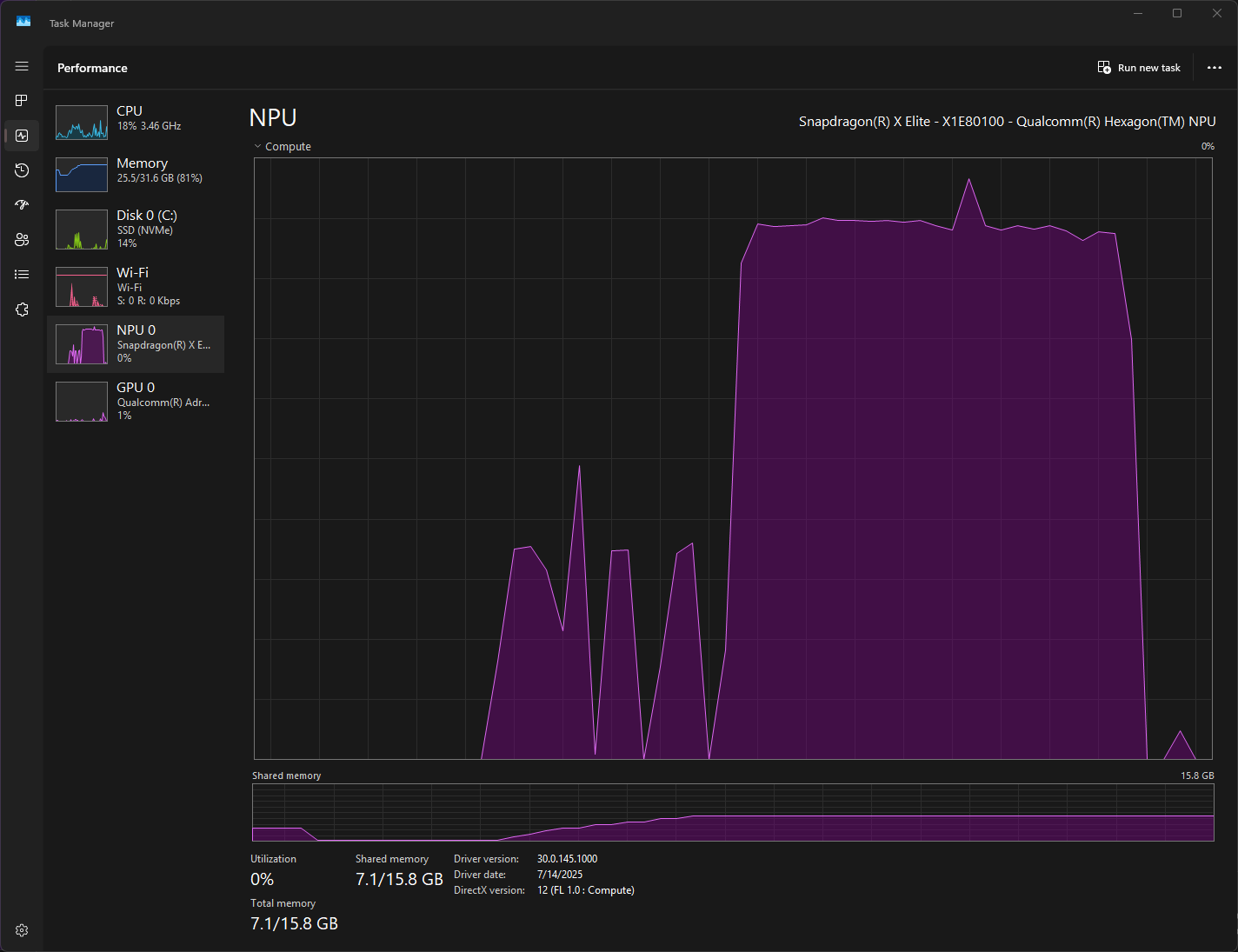

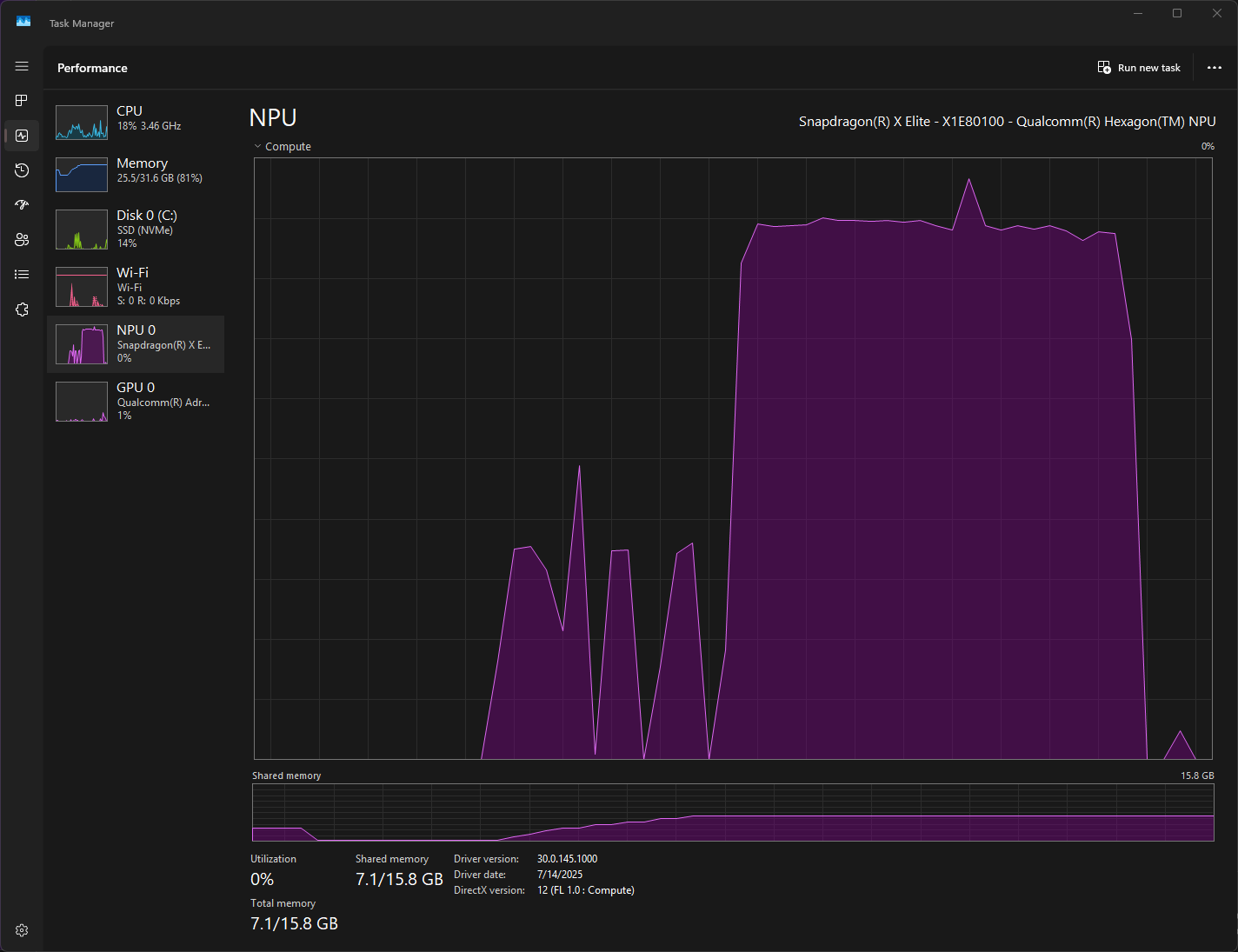

Starting to think through how I'd revisit my current workflows with easy access to models of different shapes and forms:

there are several different constraints to consider

some design decisions that seem obvious

things to watch out for

upcoming projects

misc

ip route show in wsl to check the ip address that windows services run on

foundry service commands to tweak and set the port the service was running on

ncat to port forward / bind the address to 0.0.0.0 to be able to access the server:

ncat -l 0.0.0.0 1234 --sh-exec "ncat 127.0.0.1 1234" --keep-open

(gptel-make-openai "Foundry"

:host "172.29.80.1:1234"

:endpoint "/v1/chat/completions"

:protocol "http"

:stream t

:models '(qwen2.5-7b-instruct-qnn-npu:1))

gptel-diagnostic buffers along the way because they show the request that failed.
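To check the endpoint outside of emacs, the same request can be rebuilt by hand. This is a minimal sketch, assuming the WSL default gateway from `ip route show` is the address the Windows service is reachable on, and that the forwarded server speaks the OpenAI-style chat completions format that the gptel config above points at. The function names (`windows_host_ip`, `build_chat_request`, `ask`) are mine and purely illustrative.

```python
import json
import urllib.request
from typing import Optional


def windows_host_ip(route_output: str) -> Optional[str]:
    """Pick the gateway out of `ip route show` output.

    Looks for a line shaped like 'default via 172.29.80.1 dev eth0'.
    Assumption: inside WSL this gateway is the Windows host address.
    """
    for line in route_output.splitlines():
        parts = line.split()
        if len(parts) >= 3 and parts[0] == "default" and parts[1] == "via":
            return parts[2]
    return None


def build_chat_request(host: str, port: int, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # keep the response as one JSON document
    }
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(host: str, port: int, model: str, prompt: str) -> str:
    """Send the request and return the first reply (needs the server running)."""
    request = build_chat_request(host, port, model, prompt)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("172.29.80.1", 1234, "qwen2.5-7b-instruct-qnn-npu:1", "hello")` from WSL should exercise the same path gptel uses; if it fails, printing `build_chat_request(...).data` shows exactly what was sent.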

I decided to try both implementing things manually and vibe-coding by relying on agents, and very quickly I found myself leaning on the agent wherever I didn't know the APIs by heart.

18.00 Thinking through the appropriate context for terminal completion:

For implementation,

17.30 AI Code Interface seemed a little bit rough at the moment, but very promising. My current stack to use LLMs seems unfortunately fragile, and I'm even beginning to find emacs heavier than I would like. The idea that LLMs can accelerate me that much is extremely tempting, but I still haven't been able to put this into real practice. I need to tackle this with more curiosity and fewer expectations, otherwise it becomes fairly frustrating.

On a positive note, the vterm extension for emacs -- something I hadn't tried before -- is pretty amazing; I'm actually finding it tempting to just use emacs for managing terminals for day to day work.

I think the only way to be productive is to build my own tools. I need to break this into two pieces:

The other set of tools I'd like to build involves controlling windows directly at the desktop layer, as the most general/self-learning environment possible.

I'm sure I'll end up post-training and training my own models at some point, just not there yet.

15.00 Second attempt at this, trying out programming with codex in a coffee shop. Something I need to get used to is figuring out what to do / how to work while the agent is doing the work here. I need to do less work directly, but it's not clear if this is faster than I would be with direct, partially augmented execution.

Surprisingly enough, the limiting factors feel like the speed at which the agent is thinking, and managing the output of the agent / more visibility into the agent's changes.

I suspect I gave codex far too much to do when I asked it to add auto sort/format support: it seems to be doing something extremely complicated and using far too many tokens. It's also hard to get into flow because it's extremely interrupt based -- I have to keep an eye on codex and can't disconnect entirely, but I'm also not bandwidth constrained.

Writing while the agent programs seems reasonable, but also like a waste of time. I suspect I'll try to do a round of editing and cleanup at the end. Looking at the actual code generated, I'm somewhat frustrated: tentatively because it doesn't match my programming taste particularly well.

I'd like to figure out a way to use this locally / in emacs as an assistant or an oracle instead of an agent.

Asking chatgpt pointed me to ai-code-interface, which seems fairly promising as a mechanism for interacting with agents.

Funnily enough, as I switch back to coding by hand / some form of interactive/augmented programming my first instinct is to make a tmux wrapper class for easier tmux interaction, a result of reading openai's approach. Perhaps I can lean on agents to generate a first attempt completely independently to give me some sense of how things could work. I already do this, but haven't explicitly leaned on this as an approach yet.
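A minimal sketch of what such a tmux wrapper class could look like, assuming it only needs to start a detached session, type a line into it and read the screen back. The class and method names are hypothetical; the tmux subcommands it shells out to (`new-session`, `send-keys`, `capture-pane`, `kill-session`) are standard. The runner is injectable so the wrapper can be exercised without a live tmux server.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def run_tmux(argv: List[str]) -> str:
    """Run `tmux <argv>` and return its stdout; raises if tmux fails."""
    result = subprocess.run(
        ["tmux", *argv], capture_output=True, text=True, check=True
    )
    return result.stdout


class TmuxSession:
    """Thin wrapper around one named tmux session (illustrative only)."""

    def __init__(self, name: str, runner: Runner = run_tmux) -> None:
        self.name = name
        self._run = runner

    def create(self) -> None:
        # -d keeps the new session detached from the current terminal.
        self._run(["new-session", "-d", "-s", self.name])

    def send_line(self, text: str) -> None:
        # -l sends the text literally, then Enter is sent as a key name.
        self._run(["send-keys", "-t", self.name, "-l", text])
        self._run(["send-keys", "-t", self.name, "Enter"])

    def screen(self) -> str:
        # -p prints the visible pane contents to stdout.
        return self._run(["capture-pane", "-p", "-t", self.name])

    def kill(self) -> None:
        self._run(["kill-session", "-t", self.name])
```

Typical use would be `s = TmuxSession("scratch"); s.create(); s.send_line("ls"); print(s.screen())`, which is roughly the loop an agent needs to show what it did and that the result works.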

10.00 Started with claude code, but I became annoyed fairly quickly with context claude was missing and failing on. Then I moved to codex, and that actually did some work faster than I would have, leading me to try and apply agents more aggressively.

Some things I have to calibrate myself on:

I definitely think claude/codex would benefit a lot from relying on tmux or building similar functionality in so that it's much easier for the models to show what they're doing and that the results work.

I'm also fairly surprised none of these interactive modes have started triggering a webserver ui to help coordinate the behavior of the editor while retaining control of the shell, because the interaction with the agent is out-of-band instead of inline.

Brainstorming different ways to apply AI in my daily life

I would much rather have Clippy than an agent to delegate to and then validate the work after: that needs a very different set up than I'm currently used to.

Ideally I'd use a visual agent to read whatever I'm doing and suggest actions/completions, integrating at the level of keyboard and mouse: the OS should have layers that integrate

As a first step, I can do this at the terminal level instead, with tmux: relying on tmux's buffers, etc. to be able to read active screens

Settling on djn as the program name, as a reference to djinn; I'll prototype in Python for simplicity and drop down to C wherever I need to for a very portable solution.
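Reading the active screens through tmux could start out as something like the sketch below. It assumes the useful context is the last few lines of the active pane in each session's current window; the function names and the `max_lines` cutoff are placeholders of mine, not a settled design. It uses `tmux list-panes -a -F` with standard format variables and `tmux capture-pane -p`.

```python
import subprocess
from typing import Callable, Dict, List

Runner = Callable[[List[str]], str]

# One pane per line: id, pane-active flag, window-active flag, running command.
PANE_FORMAT = "#{pane_id} #{pane_active} #{window_active} #{pane_current_command}"


def run_tmux(argv: List[str]) -> str:
    """Run `tmux <argv>` and return its stdout; raises if tmux fails."""
    result = subprocess.run(
        ["tmux", *argv], capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_panes(listing: str) -> List[dict]:
    """Turn `list-panes -F PANE_FORMAT` output into a list of dicts."""
    panes = []
    for line in listing.splitlines():
        fields = line.split(" ", 3)
        if len(fields) != 4:
            continue  # skip anything that doesn't match the format
        pane_id, pane_active, window_active, command = fields
        panes.append({
            "id": pane_id,
            "active": pane_active == "1" and window_active == "1",
            "command": command,
        })
    return panes


def active_screens(runner: Runner = run_tmux, max_lines: int = 40) -> Dict[str, str]:
    """Map each active pane id to the last `max_lines` lines on its screen."""
    listing = runner(["list-panes", "-a", "-F", PANE_FORMAT])
    screens = {}
    for pane in parse_panes(listing):
        if not pane["active"]:
            continue
        text = runner(["capture-pane", "-p", "-t", pane["id"]])
        lines = text.rstrip("\n").splitlines()
        screens[pane["id"]] = "\n".join(lines[-max_lines:])
    return screens
```

The returned text is what would be handed to a model as context for suggestions; trimming to the tail of the screen is one guess at keeping prompts small.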

I realized the way I use AI has somewhat ossified, and decided to think through my current experiences and friction points with all the tools I've used, brainstorm a little bit about how I'd build my own tools and think through the prototypes I'd like to build over time. This should complement the notes I'd been taking in the log for applying AI in my daily work, but somehow the more unstructured weekly notes work better.

The primary way I still use AI is through chat interfaces, and generally for active programming:

I would like to use LLMs much more aggressively, particularly for tasks involving repetitive exploration, navigating APIs, and dealing with meetings, chat threads and other notification-based systems, but I have been struggling significantly with ergonomics: it's much faster for me to directly engage, read and type with confidence than to wait for the LLM to respond to my requests, or to find a way to pipe the data to the LLM.

Taking stock of the different ways to apply AI for development that I've tried so far, thinking through where things can go, and exploring ideas for what I wish existed.

Trying to use Gemini for cleaning up the markdown files used in this notebook: it got stuck in an infinite loop and used a ridiculous amount of tokens reading all the files.

None of Gemini, Claude and Codex are very CLI friendly -- they're fancier tools that rely on access to tools in the terminal, but don't necessarily integrate well with other tools. I have a lot of ideas to implement here.

The thing I have to watch out for is getting frustrated when AI agents aren't as magical as I'd like them to be; I need to keep reasonable expectations that aren't driven by LinkedIn.

Claude generated my GitHub Actions workflow to publish this site in 2 shots, which is far fewer than the number of attempts it generally takes me to get it right.

sudo rm -r ~/.nvm/versions/node/v22.14.0

npm cache clean --force

sudo npm cache clean --force

npm install -g @anthropic-ai/claude-code --verbose

— Kunal